Cameras are devices for capturing photographic images. A camera is basically a box with an opening in one wall that lets light enter the box and form an image on the opposite wall. The earliest such “cameras” were what are now known as camera obscuras, which are closed rooms with a small hole in one wall. The name “camera obscura” comes from Latin: “camera” meaning “room” and “obscura” meaning “dark”. (Which is incidentally why in English “camera” refers to a photographic device, while in Italian “camera” means a room.)

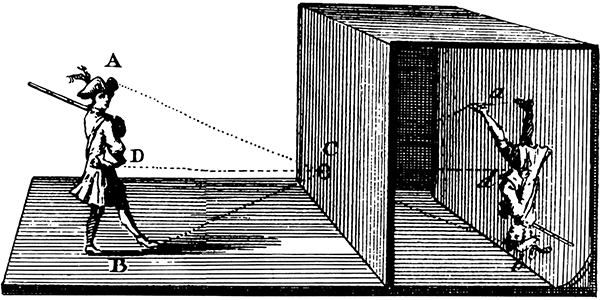

A camera obscura works on the principle that light travels in straight lines. How it forms an image is easiest to see with reference to a diagram:

In the diagram, the room on the right is enclosed and light can only enter through the hole C. Light from the head region A of a person standing outside passes through the hole C and, travelling in a straight line, hits the far wall of the room near the floor. Light from the person’s feet B passes through the hole C and ends up hitting the far wall near the ceiling. Light from the person’s torso D hits the far wall somewhere in between. All of the light from the person that enters through the hole C is therefore projected onto the far wall in such a way that it forms an image of the person, upside down. The image is faint, so the room needs to be dark for it to be visible.
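The straight-line geometry can be checked with a few lines of Python. This is a minimal sketch assuming an ideal pinhole and made-up distances (person 10 m from the hole, far wall 4 m behind it, hole 1.5 m above the floor); the function name is hypothetical. By similar triangles, a point above the hole lands below it on the far wall, and vice versa, which is why the image comes out upside down.

```python
def project_through_pinhole(height_m, hole_height_m, object_distance_m, wall_distance_m):
    """Height on the far wall where a ray from a point at `height_m` lands.

    The ray travels in a straight line through an ideal pinhole. By similar
    triangles, a point `dh` above the hole lands dh * (wall / object distance)
    *below* the hole, so the image is inverted and scaled.
    """
    dh = height_m - hole_height_m
    return hole_height_m - dh * (wall_distance_m / object_distance_m)


# Hypothetical layout: person 10 m from the hole, far wall 4 m behind it,
# hole 1.5 m above the floor.
head = project_through_pinhole(1.8, 1.5, 10.0, 4.0)   # point A
torso = project_through_pinhole(1.2, 1.5, 10.0, 4.0)  # point D
feet = project_through_pinhole(0.0, 1.5, 10.0, 4.0)   # point B
print(f"head -> {head:.2f} m, torso -> {torso:.2f} m, feet -> {feet:.2f} m")
```

With these assumed numbers the head lands lowest on the wall and the feet highest, with the torso in between, as in the diagram.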

If you have a modern photographic camera, you can use a long exposure to photograph the faint, upside-down image projected inside the room.

The hole in the wall needs to be small to keep the image reasonably sharp. If the hole is large, rays of light from a single point in the scene outside pass through different parts of the hole and land on different points of the far wall, making the image blurry: the larger the hole, the brighter the image, but the blurrier it is. You can overcome this by placing a lens in the hole, which brings the rays from each point of the scene back together to a sharp focus on the wall.
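A rough illustration of the trade-off, under simple geometric-optics assumptions (a point source, a circular hole, and no diffraction): rays from one point fan out through the whole hole, so by similar triangles the patch of light on the wall is wider than the hole itself, while the light collected grows with the hole's area. The helper names and distances are illustrative only.

```python
def blur_spot_mm(hole_mm, object_distance_mm, wall_distance_mm):
    """Width of the patch of light one outside point makes on the far wall.

    Rays from the point fan out through the whole hole; by similar triangles
    the patch grows from `hole_mm` at the hole to this width at the wall.
    Geometric optics only: diffraction is ignored.
    """
    return hole_mm * (object_distance_mm + wall_distance_mm) / object_distance_mm


def relative_brightness(hole_mm, reference_hole_mm):
    """Light collected scales with the hole's area, i.e. diameter squared."""
    return (hole_mm / reference_hole_mm) ** 2


# Hypothetical room: subject 10 m away, far wall 4 m behind the hole.
for hole in (1.0, 2.0, 4.0):
    print(f"{hole:.0f} mm hole: blur spot {blur_spot_mm(hole, 10_000, 4_000):.1f} mm, "
          f"relative brightness {relative_brightness(hole, 1.0):.0f}")
```

Doubling the hole diameter doubles the blur spot but quadruples the light, which is the compromise a lens removes.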

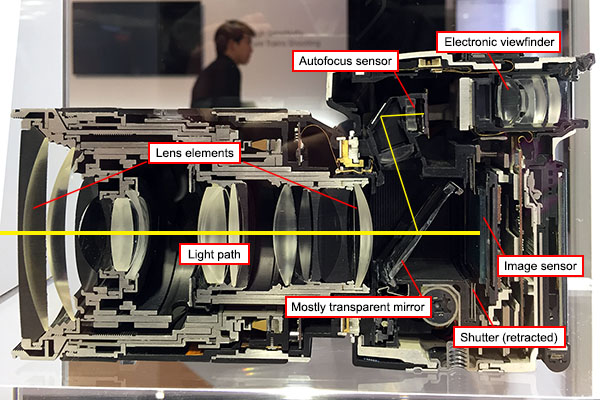

A photographic camera is essentially a small, portable camera obscura, using a lens to focus an image of the outside world onto the inside of the back of the camera. The critical difference is that where the image forms on the back wall, there is some sort of light-sensitive device that records the pattern of light, shadow, and colour. The first cameras used light-sensitive chemicals coated onto a flat surface. Light causes chemical reactions that change physical properties of the chemicals, such as hardness or colour. Various processes can then be used to convert the chemically coated surface into an image that more or less resembles the scene projected into the camera. Modern chemical photography uses film as the support medium for the chemicals, but glass plates were popular in the past and are still used for specialty purposes today.

More recently, photographic film has been largely displaced by digital electronic light sensors. Sensor manufacturers make silicon chips that contain millions of tiny individual light sensors arranged in a rectangular grid. Each one measures the amount of light that hits it, and that measurement is stored as one pixel in a digital image file, which holds millions of pixels that together encode the image.

One important parameter in photography is the exposure time (also known as “shutter speed”). The opening where the light enters is covered by a shutter, which opens when you press the shutter button and closes a short time later, with the duration set by the camera settings. The longer you leave the shutter open, the more light is collected and the brighter the resulting image. In bright sunlight you might only need to expose for a thousandth of a second or less. In dimmer conditions, such as indoors or at night, you need to leave the shutter open for longer, sometimes up to several seconds, to make a satisfactory image.

A problem is that people are not good at holding a camera still for more than a fraction of a second. Our hands shake by small amounts which, while insignificant for most things, are large enough to make a long exposure blurry, because the camera points in slightly different directions during the exposure. Photographers use a rule of thumb to determine the longest exposure time that can safely be used: for a standard 35 mm SLR camera, take the reciprocal of the focal length of the lens in millimetres, and that is the longest usable exposure, in seconds, for hand-held photography. For example, when shooting with a 50 mm lens, your exposure should be 1/50 second or less to avoid blur caused by hand shake. Longer exposures will tend to be blurry.
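The rule of thumb is simple enough to write as a one-line function. This is a sketch of the heuristic as stated above, assuming a 35 mm camera; the function name is made up for illustration, and the result is a guideline rather than a hard limit.

```python
def longest_handheld_exposure_s(focal_length_mm):
    """Reciprocal rule of thumb for a 35 mm camera: about 1/f seconds,
    with the focal length f in millimetres. A guideline, not a hard limit."""
    if focal_length_mm <= 0:
        raise ValueError("focal length must be positive")
    return 1.0 / focal_length_mm


for focal in (24, 50, 200):
    t = longest_handheld_exposure_s(focal)
    print(f"{focal} mm lens: 1/{round(1 / t)} s or shorter ({t:.3f} s)")
```

Longer lenses magnify the scene, and with it the shake, so the safe exposure shrinks as the focal length grows.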

The traditional solution has been to use a tripod to hold the camera still while taking a photo, but most people don’t want to carry around a tripod. Since the mid-1990s, another solution has become available: image stabilisation. Image stabilisation uses technology to mitigate or undo the effects of hand shake during image capture. There are two types of image stabilisation:

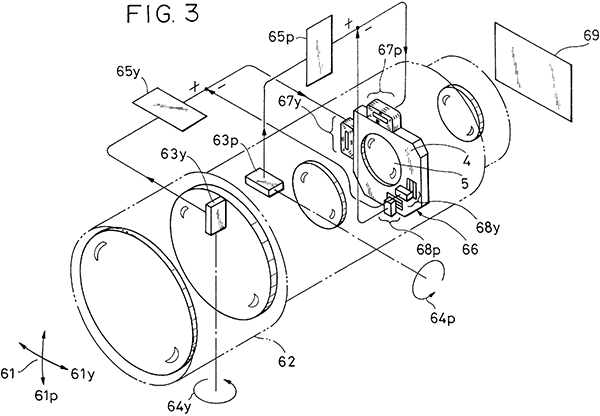

1. Optical image stabilisation was the first type invented. The basic principle is to move certain optical components of the camera to compensate for the shaking of the camera body, keeping the image at the same location on the sensor. Gyroscopes measure the tilting of the camera body caused by hand shake, and servo motors physically move the lens elements or the image sensor (or both) to compensate. The motions are very small but critical, because the pixels on a modern camera sensor are only a few micrometres across, so if the image moves by more than a few micrometres it will become blurry.

2. Digital image stabilisation is a newer technology, which relies on image processing rather than moving physical components in the camera. Digital image processing can go some way towards removing blur from an image, but this is never a perfect process, because blurring irretrievably loses some of the information. Another approach is to capture multiple shorter exposures and combine them afterwards. This produces a composite longer exposure, but each sub-image can be shifted slightly to compensate for any motion of the camera before they are added together. Although digital image stabilisation is fascinating, in this article we are concerned with optical image stabilisation, so I’ll say no more about digital.
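To make the optical principle in item 1 concrete, here is a heavily simplified one-axis simulation in Python. It is an illustrative sketch, not how any real camera firmware works: it assumes sensor-shift stabilisation, a perfect gyroscope, a made-up 5 Hz hand wobble, and an actuator that lags one gyro sample behind. Each step integrates the measured angular rate into a tilt angle, converts the tilt into an image displacement of f × tan(angle) on the sensor, and shifts the sensor by that amount. All names are hypothetical.

```python
import math


def image_shift_um(angle_rad, focal_length_mm):
    """Distance the image moves on the sensor for a tilt of `angle_rad`."""
    return focal_length_mm * 1000.0 * math.tan(angle_rad)


def simulate_ois(gyro_rates_rad_s, dt_s, focal_length_mm, pixel_pitch_um):
    """Toy one-axis model of gyro-based sensor-shift stabilisation.

    Assumptions (illustrative only): a perfect gyro, and an actuator that
    reaches each commanded position one sample after the gyro reading.
    Returns (worst uncorrected motion, worst residual motion) in pixels.
    """
    angle_rad = 0.0
    sensor_shift_um = 0.0
    worst_raw = worst_residual = 0.0
    for rate in gyro_rates_rad_s:
        angle_rad += rate * dt_s  # integrate angular rate into tilt angle
        image_um = image_shift_um(angle_rad, focal_length_mm)
        worst_raw = max(worst_raw, abs(image_um) / pixel_pitch_um)
        # Residual = where the image is now minus where the sensor has moved to.
        worst_residual = max(worst_residual, abs(image_um - sensor_shift_um) / pixel_pitch_um)
        sensor_shift_um = image_um  # command the sensor to follow the image
    return worst_raw, worst_residual


# Made-up hand shake: 5 Hz wobble, 5e-4 rad (about 0.03 degrees) amplitude,
# sampled every millisecond for half a second.
dt = 0.001
omega = 2 * math.pi * 5.0
amplitude_rad = 5e-4
rates = [amplitude_rad * omega * math.cos(omega * k * dt) for k in range(500)]
raw, residual = simulate_ois(rates, dt, focal_length_mm=50.0, pixel_pitch_um=5.0)
print(f"uncorrected: {raw:.1f} px of motion, stabilised: {residual:.2f} px")
```

With these assumed numbers the uncorrected wobble smears the image across about 5 pixels, while the residual after correction stays well under one pixel.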

Early optical image stabilisation hardware could stabilise an image by about 2 to 3 stops of exposure. A “stop” is a change in exposure by a factor of 2. With 3 stops of image stabilisation, you can safely increase your exposure time by a factor of $2^{3} = 8$. So with a 50 mm lens, rather than needing an exposure of 1/50 second or less, you can get away with about 1/6 second or less, a significant improvement.
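The stop arithmetic can be sketched the same way: each stop doubles the allowable exposure time, so n stops multiply it by 2 to the power n. The function below is a hypothetical helper, starting from the 1/50 second reciprocal-rule limit for a 50 mm lens.

```python
def stabilised_exposure_s(unstabilised_s, stops):
    """Each stop doubles the usable exposure time: n stops give a factor of 2**n."""
    return unstabilised_s * 2 ** stops


base_s = 1 / 50  # reciprocal-rule limit for a 50 mm lens
t = stabilised_exposure_s(base_s, 3)
print(f"3 stops: factor {2 ** 3}, about {t:.2f} s, i.e. roughly 1/{round(1 / t)} s")
```

Fractional stops work too: passing 6.5 stops gives about 1.8 seconds.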

Newer technology has improved optical image stabilisation to about 6.5 stops. This gives an improvement by a factor of $2^{6.5} \approx 91$, so that a 1/50 second exposure can now be stretched to almost 2 seconds without blurring. Will we soon see further improvements giving even more stops of optical stabilisation?

Interestingly, the answer is no. At least not without a fundamentally different technology. According to an interview with Setsuya Kataoka, Deputy Division Manager of the Imaging Product Development Division of Olympus Corporation, 6.5 stops is the theoretical upper limit of gyroscope-based optical image stabilisation. Why? In his words[2]:

> 6.5 stops is actually a theoretical limitation at the moment due to rotation of the earth interfering with gyro sensors.

Wait, what?

This is a professional camera engineer, saying that it’s not possible to further improve camera image stabilisation technology because of the rotation of the Earth. Let’s examine why that might be.

As calculated above, when we’re in the realm of 6.5 stops of image stabilisation, a typical exposure is going to be of the order of a second or so. The gyroscopes inside the camera are attempting to keep the camera’s optical system effectively stationary, compensating for the photographer’s shaky hands. However, in one second the Earth rotates by an angle of 0.0042° (equal to 360° divided by the sidereal rotation period of the Earth, 86164 seconds). And gyroscopes hold their position in an inertial frame, not in the rotating frame of the Earth. So if the camera is optically locked to the angle of the gyroscope at the start of the exposure, one second later it will be out by an angle of 0.0042°. So what?

Well, a typical digital camera sensor contains pixels of the order of 5 μm across. With a focal length of 50 mm, a pixel subtends an angle of 5/50000×(180/π) = 0.006°. That’s very close to the same angle. In fact if we change to a focal length of 70 mm (roughly the border between a standard and telephoto lens, so very reasonable for consumer cameras), the angles come out virtually the same.

What this means is that if we take a 1 second exposure with a 70 mm lens (or a 2 second exposure with a 35 mm lens, and so on), with an optically stabilised camera system that perfectly locks onto a gyroscopic stabilisation system, the rotation of the Earth will cause the image to drift by a full pixel on the image sensor. In other words, the image will become blurred. This theoretical limit to the performance of optical image stabilisation, as conceded by professional camera engineers, demonstrates that the Earth is rotating once per day.
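The numbers in the last few paragraphs can be reproduced with a short script. It is a sketch under the text's assumptions (5 μm pixels, a sidereal day of 86164 s, small angles), plus one more made explicit here: the worst case, where the camera points perpendicular to the Earth's rotation axis so that the whole rotation shows up as image drift. Function and constant names are illustrative.

```python
import math

SIDEREAL_DAY_S = 86164.0  # Earth's rotation period, as quoted in the text


def earth_rotation_deg(exposure_s):
    """Angle the Earth turns through during the exposure."""
    return 360.0 * exposure_s / SIDEREAL_DAY_S


def pixel_angle_deg(pixel_pitch_um, focal_length_mm):
    """Angle subtended by one pixel (small-angle approximation)."""
    return math.degrees(pixel_pitch_um / (focal_length_mm * 1000.0))


def drift_pixels(exposure_s, focal_length_mm, pixel_pitch_um=5.0):
    """Worst-case drift, in pixels, of an image locked to an inertial frame,
    assuming the camera points perpendicular to the Earth's rotation axis."""
    return earth_rotation_deg(exposure_s) / pixel_angle_deg(pixel_pitch_um, focal_length_mm)


print(f"Earth turns {earth_rotation_deg(1.0):.4f} deg per second")
for focal in (50, 70):
    print(f"{focal} mm: one pixel spans {pixel_angle_deg(5.0, focal):.4f} deg")
print(f"1 s at 70 mm: {drift_pixels(1.0, 70):.2f} px; 2 s at 35 mm: {drift_pixels(2.0, 35):.2f} px")
```

Both a 1 second exposure at 70 mm and a 2 second exposure at 35 mm come out at about 1.02 pixels of drift, matching the argument above.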

To tie this in to our theme of comparing to a flat Earth, I’ll concede that this current limitation would also occur if the flat Earth rotated once per day. However, the majority of flat Earth models deny that the Earth rotates, preferring the cycle of day and night to be generated by the motion of a relatively small, near sun. The current engineering limitations of camera optical image stabilisation rule out the non-rotating flat Earth model.

You could in theory compensate for the angular error caused by the Earth’s rotation, but to do that you’d need to know which direction your camera was pointing relative to the Earth’s rotation axis. Photographers hold their cameras in all sorts of orientations, so you can’t assume this; you need to know the direction of gravity relative to the camera, which way is north, and your latitude. There are devices that measure these (accelerometers, magnetometers, and GPS), so maybe some day soon camera engineers will include data from them to further improve image stabilisation. At that point, the technology will rely on the fact that the Earth is spherical, because the orientation of gravity relative to the rotation axis changes with latitude, whereas on a rotating flat Earth gravity is always at a constant angle to the rotation axis (parallel to it in the simple case of the flat Earth spinning like a CD).
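As a sketch of why latitude matters, the snippet below expresses the Earth's rotation in a local East-North-Up frame for a spherical Earth, a standard result: the rotation vector has a horizontal (northward) component Ω cos(latitude) and a vertical component Ω sin(latitude). Converting it into the camera's own axes would additionally need the camera's attitude, which is not modelled here. Names are illustrative.

```python
import math

EARTH_RATE_RAD_S = 2 * math.pi / 86164.0  # one turn per sidereal day


def earth_rate_enu(latitude_deg):
    """Earth's angular velocity in a local East-North-Up frame, spherical Earth.

    No east component; Omega*cos(latitude) pointing north along the ground;
    Omega*sin(latitude) pointing straight up (down in the southern hemisphere).
    """
    lat = math.radians(latitude_deg)
    return (0.0, EARTH_RATE_RAD_S * math.cos(lat), EARTH_RATE_RAD_S * math.sin(lat))


def axis_angle_from_vertical_deg(latitude_deg):
    """Angle between the local vertical and the Earth's rotation axis."""
    east, north, up = earth_rate_enu(latitude_deg)
    return math.degrees(math.atan2(math.hypot(east, north), up))


for lat in (0, 35, 52, 90):
    print(f"latitude {lat:2d} deg: rotation axis is {axis_angle_from_vertical_deg(lat):.0f} deg from vertical")
# On a flat disc spinning about its vertical axis (the CD model in the text),
# this angle would be 0 degrees at every location.
```

The angle falls from 90 degrees at the equator to 0 at the North Pole, which is the latitude dependence any such compensation would have to account for.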

And the fact that your future camera can perform 7+ stops of image stabilisation will depend on the fact that the Earth is a globe.

References:

[1] Toyoda, Y. “Image stabilizer”. US Patent 6064827, filed 1998-05-12, granted 2000-05-16. https://pdfpiw.uspto.gov/.piw?docid=06064827

[2] Westlake, Andy. “Exclusive interview: Setsuya Kataoka of Olympus”. Amateur Photographer, 2016. https://www.amateurphotographer.co.uk/latest/photo-news/exclusive-interview-setsuya-kataoka-olympus-95731 (accessed 2019-09-18).

This is by far the most unexpected and fascinating one so far. And there’s still 73 more to come?!

I knew my camera lenses had an “image stabilisation” switch, but that had always confused me because I had assumed that it was an entirely digital trick – in which case the system would exist in the camera body and not the lens (presumably the glossed-over digital image stabilisation mentioned here does actually exist in the camera body, assuming DSLRs use it at all).

It’s amazing just how deeply our globe-shaped world can have influence on everyday devices. I certainly never expected to see camera lenses on this list!

There is an image stabilization system that is in the body (called In Body Stabilization or IBS, cleverly enough). But that works by moving the sensor, rather than an element of the lens. It should still be considered an optical system.

IBIS, In Body IMAGE Stabilization, I meant.

I agree with Daniel and Christopher: this is the most surprising proof to date for me as well. Fascinating.

Wouldn’t that 6.5 stops limit be lowered by higher image sensor resolutions (greater density, more pixels per square centimeter)? Did the switch to 4K make optical stabilization harder?

Etymology digression. You wrote, ‘The name “camera obscura” comes from Latin: “camera” meaning “room” and “obscura” meaning “dark”. (Which is incidentally why in English “camera” refers to a photographic device, while in Italian “camera” means a room.)’

English “chamber” is also from Latin “camera” (via Norman French, I believe, which is where the “ch-” phoneme comes in). English has a weird history, where it can inherit different words from the same root via different ancestors, e. g. “shirt” and “skirt” were in origin the same proto-Germanic word for a garment. “Shirt” is how Old English ended up using it, “skirt” came in via Old Norse. (No Old English word had “sk-” at the beginning. Cognates of English “sh-” words start with “sk-” in Old Norse.)

Actually the pixel size of around 5 micrometres is close to the limiting optical resolution of a standard sized camera system, due to diffraction effects. If you decrease the pixel size much more, you don’t actually increase sharpness of the image – you get more pixels, but the image on those pixels is blurred by diffraction to about the same resolution anyway. So it’s a case of diminishing returns.

In a sense moving to the current higher resolution sensors did make image stabilisation more difficult, because it’s easier to see the effects of the blurring by enlarging the pixels. The same amount of blurring is present if you have fewer pixels, but you’re undersampling the image then, so it’s harder to notice.

Following up on chamber and etymology: the Latin root of “obscure” means darkened as well as hidden; and, while “chamber” can be used to mean a room, it can also mean any enclosed space (e.g. the chambers of the heart).

Thus we could translate “camera obscura” as “darkened chamber” instead of “hidden room”; and, indeed, the space between lens and photosensor in a photographic camera is a darkened chamber (if only because light only enters it from the lens, rather than all directions, so it is darker than the world outside, even if it isn’t shut up behind a shutter for all but the moment of the exposure).

I enjoy your articles and the mention of the camera obscura reminded me of when Disney World had one in the Kodak store. It projected onto a table (I understand that some early British subs had such an arrangement) and was pretty awesome on a clear, sunny day.

When I looked at the projected street scene, I felt like I was in the serial “The Phantom Empire” where the Queen of Murania would spy on “the foolish surface dwellers”!

I know I know. This blog isn’t actually about proving flat Earthers wrong, but it’s just so hard to help myself sometimes.

Camera hardware engineers. Yet another group to add to the people on the inside of the globe earth conspiracy. There are so many. It’s amazing that they can all remain completely faithful.